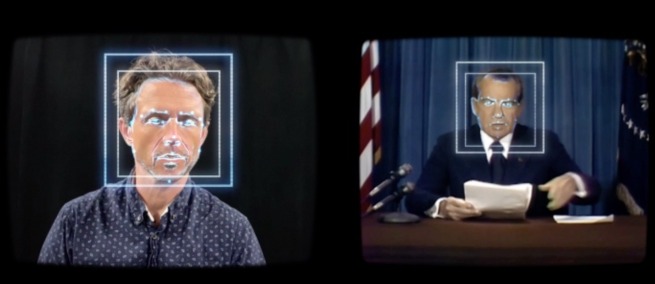

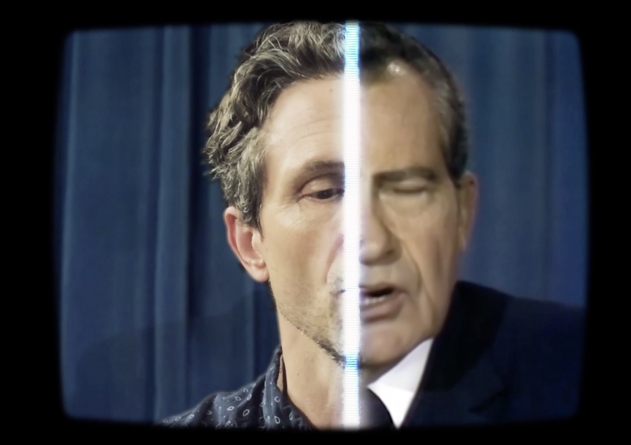

On view at Museum of the Moving Image from December 18, 2021 through May 15, 2022, Deepfake: Unstable Evidence on Screen is an exhibition exploring the historical and contemporary implications of misinformation conveyed by moving image media. The exhibition is centered on a contemporary artwork, In Event of Moon Disaster, by multimedia artist and journalist Francesca Panetta and sound artist and technologist Halsey Burgund. Created using deepfake technology powered by machine learning, the work features a broadcast of Richard Nixon sadly informing the world that the 1969 Apollo 11 mission failed. In Event of Moon Disaster, which made its world premiere at the 2019 International Documentary Film Festival of Amsterdam (IDFA), won a 2021 Emmy Award for Outstanding Interactive Media: Documentary. We spoke with Panetta and Burgund about the history of media manipulation and their current concerns, as well as creative decisions that went into the artwork.

Science & Film: What sparked your collaboration?

Francesca Panetta: I had moved to Boston in 2018 for a fellowship at the Nieman Foundation for Journalism at Harvard. I’d met Halsey at MIT when I was presenting at the MIT Open Documentary Lab, where he is a Fellow. Our practices are quite similar, so we became friends quickly, and we struck up regular brainstorming sessions with two other journalists. They were concerned journalists, people worried about misinformation and deepfakes, and Halsey and I as creative practitioners were as well. It was the 50th anniversary of the Moon landing and we were talking about deepfakes and one of us was like, there was that speech written by Bill Safire for Nixon and never used. Both Halsey and I were really familiar with the text and knew how beautiful the writing was. It was quite a solid idea from there: let’s bring that speech to life. We talked to IDFA [the International Documentary Film Festival of Amsterdam] about it, got some funding from Mozilla, then I moved to MIT where I worked on this as my job.

Halsey Burgund: I credit the never-ending cookie jar at the Nieman Foundation where we had those brainstorming sessions that fueled a lot of the creativity. But in all seriousness, it was this wonderfully fun confluence of people with different approaches, from different backgrounds, and we’d get together on Friday afternoons after a long week and talk about what’s going on in the world. As Fran said, it was one of those generative moments when something pops out and then it solidifies. The piece has taken on different conceptual forms, but the idea of creating an alternative history using very modern technology on an event of 50 years ago has always been core to the project, with the hope of helping to warn people about some of the dangers of this technology.

Image courtesy: MIT and Halsey Burgund. Photo credit: Dominic Smith.

S&F: In the exhibition, your piece is placed within some context about the history of media manipulation. What were your main references in terms of historical precedent for In Event of Moon Disaster?

FP: We talked about Moon conspiracy theories quite a lot, perhaps less about the history of misinformation though we are very aware of it. We spent a lot of time thinking about the different ways the Moon landing, or the non-Moon landing, has been re-told. We did a lot of work looking at contemporary deepfakes, and the landscape of cheap face-swapping apps, and we talked to a lot of misinformation experts trying to figure out how this piece fits in [to that history] and how it’s useful. That helped in our framing and conceptualization. We talked to people at Harvard, MIT, and further afield about what work was being done in detecting deepfakes.

HB: What I was really excited about in terms of context was how we could use a deepfake in a different or unique way. Ninety-five percent of them are non-consensual porn videos, and the others that get out are very comedic, or very much about demonstrating the technology. As artists, we were excited to use a deepfake as a culmination of this creation of an entire alternative history that we were contemplating might have happened, with the bridge to that being this real speech [that was written for Nixon]. Our piece is much less a demonstration of the technology than a broader view of how manipulations, when embedded into things that are otherwise true (real archival footage), can cause people to believe things that aren’t true.

Image courtesy: MIT and Halsey Burgund. Photo credit: Francesca Panetta.

FP: One other thing we did consider was whether to use old-fashioned media manipulation techniques from film, using tools such as [Adobe] Premiere, to distort the real archive to make it seem like the lunar lander crashed. Do the astronauts make it to the Moon? Or do they just not make it back? The most likely scenario was that the astronauts would stay on the Moon. This kind of cheap-fake technique of manipulating archival media is an old and current media manipulation technique that is more common, in terms of deception, than deepfakes. The piece starts very straight, then becomes manipulated in traditional ways, then manipulated with AI. Conceptually, using all those different techniques made sense.

S&F: In terms of how realistic the deepfake is in your piece, can you talk a bit about how you decided on the extent to which you wanted to make it seem real or fake?

HB: We didn’t want to be a purveyor of misinformation; we wanted to have ethical considerations running through this. The thinking we arrived at was, we should use the most effective, modern technologies available and create something that is as convincing as possible in the moment, then try to surround that with context: that is the “pulling back of the curtain.” We wanted to give people this emotional, affective journey when they’re in the piece, and then afterwards jump into a discussion of it being fake and how we did it, and why this is significant.

S&F: How concerned are you both about this technology?

HB: We had a discussion with a large video platform about deepfakes and what they’re doing about them. They basically said, they’re there, but we have so many other problems that are so much bigger as far as misinformation goes, they’re not a big part of the picture. In some sense, we’re ahead of a curve and we don’t know where that curve will go, whether there will be a tipping point where the ability to create deepfakes is automated to such an extent that they do become as easy as cheap fakes. With all the AI out there, imagine a system where I could say, create a video of Francesca Panetta ranting about hating puppies. Then, it would know who she is and how to create that video and I could post it. We’re trying to get out in front of it and signal some concerns.

But synthetic media is a tool like any other and it can be used for positive things. It has lots of entertainment possibilities and medical possibilities with voice regeneration. We see our piece as a positive use of synthetic media as well, and that’s one of the messages we hope to get across. This technology is easy to demonize but it can be used in prosocial ways.

FP: We’re concerned about misinformation, which is coming in many forms: from chatbots, to text messages, to videos. Deepfakes are at the moment a small part of these. They’ve often been talked about in the press as something separate from other kinds of media manipulation, but as this exhibition is trying to show, we should consider this as a landscape where deepfakes are one more part. One of the most troubling parts of this landscape of misinformation is an increasing level of distrust in any media.

There is a term called the Liar’s Dividend, which describes the phenomenon where, because any media can be said to be a fake, you can deny that anything is real. I could upload a truthful video and people could say, that’s a deepfake. That is much more dangerous than a deepfake itself.

S&F: The way you’re describing it, it sounds like the problem is as much a social phenomenon as something that’s resulting from a new technology.

FP: We interviewed a scholar, Danielle Citron, and she said, “we’re the bug in the system.” That rang true to us. The technology is enabling more, different types of misinformation but we are the bug in the system.

♦

Deepfake: Unstable Evidence on Screen is on view at Museum of the Moving Image through May 15, 2022. It is organized by Barbara Miller, MoMI’s Deputy Director for Curatorial Affairs, and Joshua Glick, Assistant Professor of English, Film & Media Studies at Hendrix College and a Fellow at the Open Documentary Lab at MIT. Accompanying the exhibition is a film and public program series called “Questionable Evidence: Deepfakes and Suspect Footage in Film,” which expands upon the themes the exhibition highlights.
