Machines are scary. Whether Decepticons, Terminators, or Master Control Programs, these heartless beings embody our worst fears about technology. But while the metal-clashing visions of robots run amok in the Transformers movies look overblown, the actual research of scientists and theorists now working in artificial intelligence suggests that a future inhabited by omnipotent machines is not entirely far-fetched.

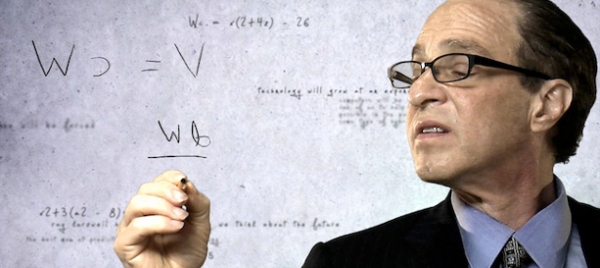

In a new documentary called Transcendent Man (currently playing in single-night engagements around the country), Ray Kurzweil, inventor of the flatbed scanner and musical synthesizer, among other innovations, claims that in just 20 years computers will be able to match and, shortly thereafter, surpass human intelligence.

Directed by first-time feature filmmaker Barry Ptolemy, a sci-fi fan who worked on E.T., the documentary examines Kurzweil's prophetic ideas as well as his personal motivations, which center on his own mortality and his father's. But the film raises more questions about the science than it answers.

Kurzweil's chronology of the way life will evolve is, in fact, often disputed. "He's completely off in the timing," counters Wired co-founder Kevin Kelly in the documentary. But many computer and cognitive scientists don't immediately write off Kurzweil's fundamental belief in the Singularity—a future moment of wide-reaching technological change that will irrevocably transform human life.

Noted cognitive scientist Douglas R. Hofstadter, author of Gödel, Escher, Bach, called Kurzweil's theories "an intimate mixture of rubbish and good ideas" in a 2007 interview with American Scientist. While Hofstadter wants to dispel what he calls their "murky" science, he admits, "I don't have any easy way to say what's right or wrong."

Kurzweil believes in the inevitability of major technological breakthroughs in genetics, nanotechnology, and robotics that will pave the way for the Singularity. But contrary to dystopian sci-fi depictions, such as the Decepticons' assaults on planet Earth, Kurzweil imagines artificial intelligence and cyber-enhanced humans working together to defeat disease and death. Some critics, however, say Kurzweil's unusually rosy outlook ignores the considerable obstacles still facing today's AI scientists.

Kurzweil is a proponent of reverse-engineering the human brain. He suggests a "complete map of the human brain" will be possible within 30 years, through advances in neuron modeling and brain scanning. By mimicking the brain closely enough, he contends, we will be able to create a machine that is both conscious and intelligent, because we are conscious and intelligent.

While many scientists agree that the complexity of the human brain is fundamentally no different from a "machine complexity" that we could develop someday, other researchers have reservations about this neuroscience-based approach.

"Neuroscience is still unable to provide a clear and direct explanation as to how the microcircuitry of the brain actually functions," says Hugo De Garis, a cognitive science professor and director of the Artificial Brain Lab at Xiamen University in China. "We know that the basic circuitry is the same all over the human cortex, but just how the circuitry works is still largely unknown."

Or as Eliezer S. Yudkowsky, co-founder and research fellow of the Singularity Institute for Artificial Intelligence, says, "Simulating the brain is the stupid way to do it. It's like trying to build the first flying machine by exactly imitating a bird on a cellular level."

A second approach, based in engineering rather than neuroscience, is called Artificial General Intelligence (AGI). It pieces together insights from neuroscience, cognitive science, computer science, the theory of algorithms, and the philosophy of mind to engineer an overall architecture for AI.

Ben Goertzel, co-editor of the book Artificial General Intelligence, and a 20-year veteran of AI research and development, says scanning the detailed functioning of the brain as a way of replicating how it operates may eventually work, but he believes an integrated AGI approach could happen sooner. "It's just software code," he says. "It's just a matter of how to write the right code. That could prove a very thorny intellectual problem, which takes longer to build than brain scanners, or it could happen faster, in 10 years or less."

Likewise, Benjamin Kuipers, a professor of computer science and engineering at the University of Michigan who supervises an Intelligent Robotics research group, says it may be possible to create human-level intelligence by reproducing the brain's processes at a higher level of organization than the neural pathways themselves. Kuipers cites simulators built to reproduce the original 1946 ENIAC (Electronic Numerical Integrator and Computer), often described as the first general-purpose electronic computer, "that don't try to replicate all those vacuum tubes, but emulate their logical functions—data structures and algorithms—using instructions implemented in modern VLSI chips," he says, referring to today's "very-large-scale integration" circuits.

But Kuipers doesn't agree with Kurzweil or Goertzel about when human-level AI will become possible, if ever. "It seems safe to say that getting there will require some amount of scientific revolution, major or minor," he says. "Therefore, there are at least some important questions we do not yet know how to ask."

Prominent philosopher Daniel C. Dennett, co-director of Tufts' Center for Cognitive Studies and author of Consciousness Explained, goes further. He believes the Singularity is theoretically possible but practically improbable. "It is also possible in principle for roboticists to design and build a robotic bird that weighs less than eight ounces, can catch insects on the fly and land on a twig," he says. "I don’t expect either possibility to be realized, ever, and for the same reason in each case: It would be astronomically expensive of time, energy, and expertise, and there would be no good reason for doing it."

One main sticking point for AI research is the idea of consciousness or emotion: vague concepts that resist quantification and scientific proof but that are essential for creating a supermachine because, many scientists claim, feelings are integral to how we handle our thoughts.

Many scientists agree that computing speeds will reach and perhaps even exceed the speed of the human brain's built-in processor (roughly 10^16 operations per second), but quantity doesn't necessarily mean quality. As Stan Franklin, University of Memphis computer science professor and author of Artificial Minds, says, "I have no belief that mere numbers are going to become intelligent or conscious. Just looking at what goes on in the small fractions of a second in our cognitive cycles, it makes it hard to believe that somehow, out of nowhere, sentience is going to appear."

In other words, there may someday be a Chevy Camaro that can transform into a robot, but the chances that such a machine could actually feel and express sadness, devotion, or fear, as Bumblebee the Autobot does in the Transformers movies, are another matter altogether.

"This computer analogy of the brain doesn't capture the reality of being, related to emotions and feelings," says Bijan Pesaran, an assistant professor of neural science at NYU. "And we don't know how to implement those in a computer."

While Pesaran notes that there have been advances in the development of machines that can engage our emotional selves and elicit sympathy, the machines themselves do not have feelings; they only feign emotional states.

And if the way we solve problems is inextricably linked to our emotional processes, Pesaran argues, "linking those two things together is definitely going to hold back our approach to the Singularity." Thus, we could be in a situation with machines that are really smart, but "emotionally dumb," he explains. "They're going to be, in a sense, crazy machines, which is going to be a problem that we will be responsible for—even before they surpass us."

It's at this point that many scientists and researchers start to traffic in dystopian notions, contrary to Kurzweil's hopeful outlook. Pesaran considers such machines a threat. His NYU colleague, Davi Geiger, an associate professor of neural science, agrees, in a sense. "It is totally plausible that computers will be better prepared to survive the world of tomorrow than we are, and so they may represent the new species," he says.

The Singularity Institute's Yudkowsky conceives of two options for humanity at that pivotal juncture. Either we build an AI with reasonably altruistic intentions and to such high standards of precision that "we all live happily ever after," or, if the first AI is sloppily produced and just smart enough to improve itself, "my guess," he says, "is that rather than have an exciting final conflict between good and evil, in which the heroic outnumbered resistance fights an army of robots with glowing red eyes, we all just go squish."

Yudkowsky is, of course, alluding to the Terminator movies and to most sci-fi plots, from Battlestar Galactica to Star Wars to I, Robot, that envision a grand battle between good humans and monstrous sentient machines, a scenario that most scientists agree is a load of hooey.

Perhaps more plausible, though far less cinematic, is a situation in which a super-intelligent AI is simply uninterested in the human species, much as a person might be uninterested in an ant or a bacterium.

For a more intelligent rendering of AI than what appears in the special-effects-driven action-adventures of Hollywood, Ben Goertzel recommends Andrei Tarkovsky's Solaris, based on Polish writer Stanislaw Lem's 1961 novel. "It really makes the point that intelligence doesn't necessarily mean human intelligence," he says. "We may have a massive intelligence which we don't even know what it's doing."