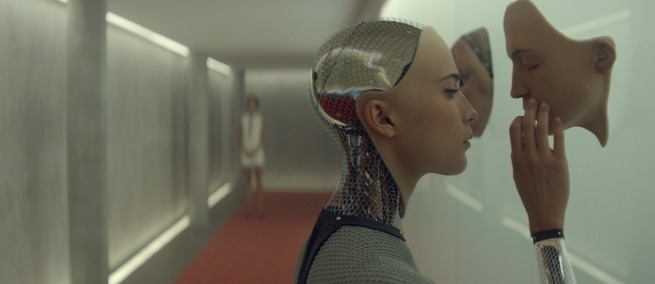

Meet Ava, the latest (and prettiest) incarnation of our culture’s longtime fascination with and fear of artificial intelligence. In Alex Garland’s Ex Machina, Ava (Alicia Vikander) is the creation of CEO-genius-madman Nathan (Oscar Isaac), a reclusive inventor who invites a young programmer, Caleb (Domhnall Gleeson), to take part in a “Turing Test” to see if his lovely invention can pass as a human being. Like predecessors such as 2001: A Space Odyssey and the Terminator franchise, Ex Machina posits that a highly functioning computer system may not necessarily be benevolent to mankind. But unlike those that came before it, the new film suggests humans can’t be trusted much, either.

Sloan Science and Film spoke with Dr. David J. Freedman, an Associate Professor of Neurobiology at the University of Chicago and member of the Center for Integrative Neuroscience and Neuroengineering Research, about how brains and machines learn and process data, if computers can attain consciousness, and, if they could, what the implications might be.

Sloan Science and Film: Can you explain your specific area of research?

David Freedman: My lab is interested in understanding patterns of activity in neural circuits in the brain involved in processing visual information. So if you look at early stages of visual processing, what you’ll find in the brain are basic representations of visual features, like the edges of objects in the visual world, color, and direction of motion. We want to understand how learning and experience change the way these features are represented, and how new memories are formed and stored in neural circuits. We do this by monitoring and directly recording electrical signals of the brain during such behaviors as decision-making, learning, and recognition tasks.

SSF: Everything you describe had to be considered when designing Ava, from learning and knowledge acquisition to how to process visual data, like her ability to understand Caleb’s “micro-expressions,” for example. In terms of your understanding of current technologies, can computers replicate those processes?

DF: There’s been a longstanding effort in the field of artificial intelligence and computer learning to create a computer-vision system that is general purpose, that can recognize images and objects in the same way that a human observer does. But these efforts have been frustrating. It has proven difficult to create computer-vision systems that can identify objects in situations that we as humans don’t have trouble with, but that are devastating to machine-learning systems. For example, we’ll often view an object from different vantage points. You might look at someone from the side or the back and still be able to recognize a familiar person. Or we’ll see a face that’s partially occluded by some other object, but we can still recognize that person. This is difficult for computer-vision systems. The most straightforward approach for a computer is to compare the pixels in the two images. If there’s a change in lighting or some other transformation, however, those pixels won’t match. So we still need to come up with more sophisticated systems that allow for more flexible and high-performing recognition. In the machine-learning world, they’re making rapid progress, at least for simple tasks. We see the results of this on the Internet, with some image-search tools that work really well, and Facebook can often recognize the face in an image without you telling it who it is. And these advances have come about through taking what we’ve learned about how the brain processes visual information, with the computer software using the same kinds of algorithms that the brain seems to use.
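As a rough illustration of the pixel-matching problem described above, the following toy sketch compares tiny grayscale images pixel by pixel. The 5x5 "bar" image and the helper names `pixel_distance`, `shift_right`, and `brighten` are made up for this example; this is not how any production vision system works, only a demonstration of why a direct pixel comparison is brittle.

```python
def pixel_distance(a, b):
    """Mean absolute difference between two equally sized grayscale images."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count


def shift_right(img, k):
    """Move every row k pixels to the right, padding the left with black (0)."""
    return [[0] * k + row[:len(row) - k] for row in img]


def brighten(img, delta):
    """Add delta to every pixel, capping at the 8-bit maximum of 255."""
    return [[min(255, p + delta) for p in row] for row in img]


# Hypothetical toy "object": a bright vertical bar on a dark background.
bar = [[0, 255, 0, 0, 0] for _ in range(5)]

same = pixel_distance(bar, bar)                     # identical images
shifted = pixel_distance(bar, shift_right(bar, 2))  # same bar, moved 2 pixels
lit = pixel_distance(bar, brighten(bar, 40))        # same bar, brighter scene

print(same, shifted, lit)
```

A person would call all three images "the same bar," yet only the identical copy scores a distance of zero. The shifted and brightened versions both register as mismatches, which is the weakness the interview points to.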

SSF: So could this easily be improved with more data?

DF: More data about the brain, actually. The brain remains incredibly mysterious. We’re learning more about it all the time, but we’re still quite far away from getting an accurate circuit diagram for how information flows throughout the brain, and also we’re pretty far from being able to write down equations for the information processing that’s actually happening in the brain. And understanding how the brain achieves everything is thought to be the most promising avenue for getting computers to do this, as well.

SSF: How do brains process information differently from computers?

DF: One of the most important specifications on a computer system is how fast the processor runs. It used to be measured in megahertz. Now it’s measured in gigahertz. We’re talking about millions or billions of cycles per second, which means that the computer processor is running at this enormously fast rate. But the brain works in a very different way. The actual cycle rate is much slower, something closer to 100 cycles per second. So we’re talking about a difference of 100 cycles vs. millions or billions of cycles per second. How does that square with the fact that a human can perform recognition or decision-making tasks that are not possible for a computer? It’s because computers are running very quickly, but they’re processing one thing at a time, in a series, whereas the human brain is processing in parallel. So to take the visual system as an example, the brain is processing the visual features that you’re looking at, at all positions, simultaneously, rather than processing, for example, the top left first and moving across, like a typewriter. The brain processes the image all at once, in parallel.
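The serial-versus-parallel trade-off can be put into back-of-the-envelope numbers. The sketch below is a deliberately crude toy model, not a claim about real processors or real neurons: it assumes one operation per cycle, a 1 GHz serial processor, and a fully parallel system with one slow 100 Hz unit per image position. The function names are hypothetical.

```python
def serial_time(num_positions, clock_hz):
    """Seconds for a single unit to visit every position, one per cycle."""
    return num_positions / clock_hz


def parallel_time(clock_hz):
    """Seconds when every position has its own unit: one cycle in total."""
    return 1 / clock_hz


CPU_HZ = 1_000_000_000  # assumed 1 GHz clock ("billions of cycles per second")
BRAIN_HZ = 100          # "something closer to 100 cycles per second"

# How many times faster the serial clock ticks.
speed_ratio = CPU_HZ // BRAIN_HZ

# In this toy model the two approaches tie when the number of positions
# equals the clock ratio; beyond that, the slow parallel system finishes first.
tie = serial_time(speed_ratio, CPU_HZ) == parallel_time(BRAIN_HZ)
bigger_scene = serial_time(10 * speed_ratio, CPU_HZ)

print(speed_ratio, tie, bigger_scene, parallel_time(BRAIN_HZ))
```

Under these assumptions the fast serial clock only wins while there are fewer items to process than the ratio between the two clock rates. The parallel system's time does not grow with the size of the scene.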

SSF: In Ex Machina, one of the sources of Ava’s intelligence is the film’s equivalent of Google. Are the algorithms involved in Google Search closer to the way the brain operates?

DF: The data you can get from a search engine is extremely useful for creating an artificial intelligence system, because you can extract a massive database of images of a particular object, say the Eiffel Tower. If you searched for images of the Eiffel Tower in a search engine, you could use that database of images to train a computer-vision system to then be able to recognize new images of the object. If you imagine taking all the databases that you can generate with a search engine, you could potentially train a learning system to classify almost any image, or even strings of text. This is the approach taken by many machine-learning algorithms. So from that standpoint, massive databases are essential for allowing an artificial intelligence to learn to classify things. That’s, in part, what’s being portrayed in the movie. The other part is that you could monitor individual users’ behavior patterns and track their own search engine use, and then you could get insights into the thought processes of individuals.
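The idea of training on a labeled database and then recognizing new examples can be sketched with one of the simplest possible learners, a nearest-centroid classifier. Everything specific here is an assumption made for illustration: the two-number "feature vectors" stand in for whatever a real system would extract from images, the labels and values are invented, and real image classifiers are far more elaborate.

```python
import math
from collections import defaultdict


def train_centroids(examples):
    """Average the feature vectors of each label to get one centroid per label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in vec]
        for label, vec in sums.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical labeled "database", as if gathered from image-search results.
training = [
    ([0.9, 0.2], "eiffel_tower"),
    ([0.8, 0.3], "eiffel_tower"),
    ([0.2, 0.8], "other"),
    ([0.1, 0.7], "other"),
]

centroids = train_centroids(training)

# New, unseen examples are labeled by their nearest centroid.
print(classify(centroids, [0.7, 0.4]))
print(classify(centroids, [0.3, 0.9]))
```

The more labeled examples go into `training`, the better each centroid summarizes its category, which is one simple way to see why large search-derived databases matter for this style of learning.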

SSF: So once you have a highly functioning A.I., the big question posed by the film is could it have emotion or consciousness? What do you think?

DF: This has been a subject of interest for philosophers and scientists for centuries, and continues to be a subject of lots of debate. My own view on this, as a neuroscientist who is trying to understand mechanisms in the real brain, is that we are uncovering new mechanisms every day as to how we recognize objects, make decisions, learn from our experiences and store our experiences as new memories, and how emotional factors impact new learning. And the more we learn about this, the more complete our understanding is becoming about how the brain operates and gives rise to more complex behaviors. And there’s nothing that we’ve encountered that leads us to think that there’s anything that can’t be explained in the brain. So by the same logic, if we can implement all of the things that we understand about the brain in a computer system, I don’t see any reason why the computer system couldn’t also reproduce all of the functions that we’re learning about in the brain, and that would extend from simpler functions, like vision, to more complex functions, like decision-making, emotion, and maybe even consciousness.

SSF: The film raises an interesting question: would that consciousness be merely “simulated” in a computer, or could it be called real in the same way we think about it for humans?

DF: Once our understanding advances far enough, it might be possible to create an exact duplicate of someone’s brain, maintaining all the connections between the neurons, which would transfer all the knowledge of the original brain to this duplicate brain. Then if you could transplant that new brain into a person, you wouldn’t say that new entity is having a simulation of consciousness. By the same token, if you got these processes working in a computer system, and it showed the same behaviors, the assumption would be that you’re witnessing real consciousness, not a simulation.

SSF: Is it theoretically possible to manufacture something analogous to the human brain as we see with Ava?

DF: There’s nothing that we’ve come across in studying the brain at the molecular level, the cellular level, or the systems and circuits level, that suggests there is some secret sauce that’s not possible to duplicate. Every cell in the brain could be broken down into its constituent parts. Increasingly, the field of molecular biology is able to create sequences of RNA and DNA. We’re just at the early stages of this new field of molecular engineering, but researchers could eventually fabricate any sequence of molecules they want. We’re very far away from that, but it’s possible.

SSF: So what is it specifically about the brain that we don’t understand?

DF: There are huge unknowns, at many different levels. At the level of trying to understand circuits of neurons, and how they are connected to one another, and how activity allows information to be processed, we’ve learned a lot about very specific operations, in tiny circuits. But that’s only a first step. There are something on the order of 10 to 100 billion neurons in your brain, and those are the cells that are interconnected to one another, and that number is staggering. So the gap in our knowledge is bridging what happens in the individual brain cell, and making the leap to understanding how many cells interact. That goes for complex systems, in general. For example, we still can’t predict the path of a flock of birds or have a reliable 10-day weather forecast.